GenAI's (near) future's (senior) talent shock

Paradoxically, while AI replaces junior-level human work, it will create a senior-level talent shortage in the near future.

Organizations must fundamentally rethink their manager-in-training talent strategies or risk a near future shock.

On the one hand, AI will quickly eliminate the need for entry- and mid-level white-collar workers, leaving only more senior workers to quality-check the AI outputs. On the other hand, replacing junior staff with AI will dry up the career pipeline through which the next generation of senior talent learns, by experience, the depth and nuance required to perform that quality-control role.

Mark Zuckerberg recently predicted that by 2025, tech companies will replace mid-level coding engineers with AI: “We will get to a point where all the code in our apps and the AI it generates will also be written by AI engineers instead of people engineers.” (Joe Rogan podcast, 2025) [1]

My work with advanced generative models suggests Zuckerberg is largely correct. But there’s a catch.

Every vendor’s vision of AI replacing mundane, repetitive, and structured human work inevitably concludes with a statement to the effect of “But humans will oversee the AI agents to ensure their actions are correct.”

Quoting here:

Claude can make mistakes. Please double-check responses.

Gemini can make mistakes, so double-check it

ChatGPT can make mistakes. Check important info.

etc.

Under current and foreseeable GenAI performance, this human QA function remains essential. Yet the dizzying pace of improvement suggests that these models will quickly become highly reliable, leaving only the most unusual edge cases for humans to catch and correct. Herein lies the organizational and society-wide dilemma.

Managers exist to handle unanticipated situations. Good managers have learned through years of experience how to identify and handle rare edge cases. Good middle and senior managers are the safety check that prevents rote processes, human or machine, from doing stupid things. They combine good judgment with deep, tacit knowledge of how their organization works, learned through lived experience, and that combination is what allows them to solve edge-case problems.

Yet, the talent pipeline for knowledgeable and experienced senior managers is being shut off by AI entry- and mid-level job replacement.

As lower-level accountants, supply chain clerks, developers, and HR administrators are increasingly replaced by GenAI tooling, organizations (and society at large) will be shutting off the managers-in-training talent pipeline. Yet middle and senior managers will become even more important for catching errors and correcting anomalies, because there will be fewer human eyes on machine behavior.

While new technologies destroy certain occupations, they simultaneously create new ones. A favorite trope of futurists is that mass-produced automobiles ended the buggy whip maker's occupation but created new roles for assembly line workers. No doubt GenAI will create a new class of jobs.

However, younger workers who lack the experience-based wisdom gained by growing up in the bowels of the business process will not have the domain expertise required to serve as end-to-end quality checkers of process outputs.

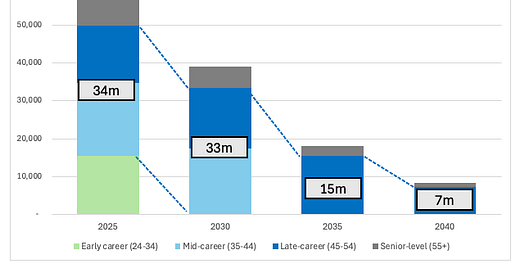

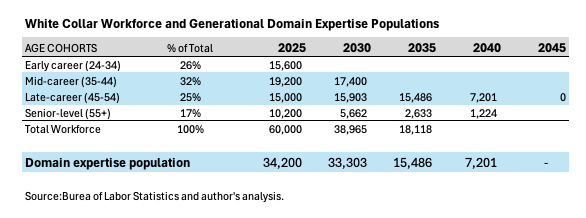

Some back-of-the-envelope math using Bureau of Labor Statistics data [2] will illustrate the point. The table and chart below show an 80% reduction in the mid- and senior-career workforce over the next 15 years. These data are based on the conservative estimate of 60 million white-collar workers in the US, broken down by age cohort. If we assume for a moment straight career progressions, with neither additional economic disruption nor further technology-induced reductions in the ratio of mid- and late-career staffing, we see a very risky future.
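The cohort arithmetic behind this kind of estimate can be sketched in a few lines of Python. This is only an illustrative sketch, not a reproduction of Table 1: apart from the 60 million total, every input is a placeholder assumption, including the equal-sized five-year age bands, the cut-off between junior and experienced bands, and the share of junior roles that survive automation. The function name `project_experienced` and its parameters are hypothetical.

```python
BAND_YEARS = 5  # width of each age band in years (assumption)


def project_experienced(bands, junior_bands, retained, steps):
    """Project the experienced (non-junior) headcount over time.

    bands: headcount per age band, youngest first (millions)
    junior_bands: how many of the youngest bands count as junior
    retained: fraction of junior roles that survive automation (assumption)
    steps: number of BAND_YEARS-long steps to simulate
    Returns one experienced headcount per step, starting at step 0.
    """
    baseline_entry = bands[0]
    # Automation hits the junior bands at step 0; experienced bands are untouched.
    current = [
        size * retained if i < junior_bands else size
        for i, size in enumerate(bands)
    ]
    history = [sum(current[junior_bands:])]
    for _ in range(steps):
        # The oldest band retires, everyone else ages by one band,
        # and a reduced entry class joins at the bottom.
        current = [baseline_entry * retained] + current[:-1]
        history.append(sum(current[junior_bands:]))
    return history


if __name__ == "__main__":
    total = 60.0   # million white-collar workers (figure used in the text)
    n_bands = 8    # ages 25-64 in five-year bands (assumption)
    bands = [total / n_bands] * n_bands  # equal band sizes (assumption)
    history = project_experienced(bands, junior_bands=2, retained=0.2, steps=4)
    for step, experienced in enumerate(history):
        year = 2025 + step * BAND_YEARS
        change = experienced / history[0] - 1
        print(f"{year}: {experienced:5.1f}M experienced ({change:+.0%})")
```

The size and timing of the decline depend heavily on which bands are treated as junior and on the assumed retention rate, so the placeholders should be swapped for real cohort data before drawing any conclusion from the output.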

The combination of 2025's late-career managers aging out (retiring) and the early-career “managers in training” pipeline being shut off will result in a dramatic drop in the population of domain-knowledgeable, experienced, and capable managers as soon as 2035. The future manager talent pipeline will start to dry up within five years.

At this point, who can believably QA the GenAI machines’ outputs?

Who Will Quality Check the AI Agents?

Table 1: White Collar Workforce and Domain Expert Cohort Sizes, 2025–2045.

Figure 1: Mid- and Late-Career Domain Expert Population Will Decline by 80% by 2040.

Perhaps in the coming years, GenAI will be so good as to not require human QA oversight, as judged by shareholders and regulatory bodies. If this happens, accountability will shift from human agents to algorithms. [3] Firm leaders and shareholders may not wish to bet the viability of their firms on the hope of cost-acceptable GenAI perfection arriving before they run out of knowledgeable managers to monitor the GenAI.

Likely, firms will need to develop new talent strategies to ensure a sufficient pipeline of process QA talent with broad and deep contextual knowledge.

But training this talent will be doubly hard, because the next generation of QA managers will only be watching digital processes rather than living in the processes. The rich, implicit knowledge of today’s mid- and senior-level workforce will have to become explicit, documented knowledge for there to be any quality control over the AI workflow and outputs. [4]

[1] Mark Zuckerberg on Joe Rogan Experience.

[2] “White collar” is a plastic occupational category. Depending upon the definition, there are between 60 and 120 million white-collar workers in the US today. Nevertheless, the cohort distributions remain roughly the same under either definition, and so do the ratios for the combined effect of older cohorts aging out and the early-career pipeline drying up.

[3] This has profound implications for regulatory scrutiny, corporate attestations, and, ultimately, accountability. How will a CEO or CIO certify GenAI algorithms’ reliability when the algorithms “know” more about the process than the few remaining managers?

[4] Of course, to the degree that all contextual knowledge can become explicitly documented, AI can replace the human QA function too, if stakeholders allow that.